Mixner Consulting

Brad Mixner provides a range of advisory and technology services to law firms, corporations, agencies, and service providers.

Mr. Mixner is recognized as an industry thought leader for his knowledge of cross-border discovery, data privacy, technology implementation and enablement, and the application of big data technology to electronic discovery and litigation support.

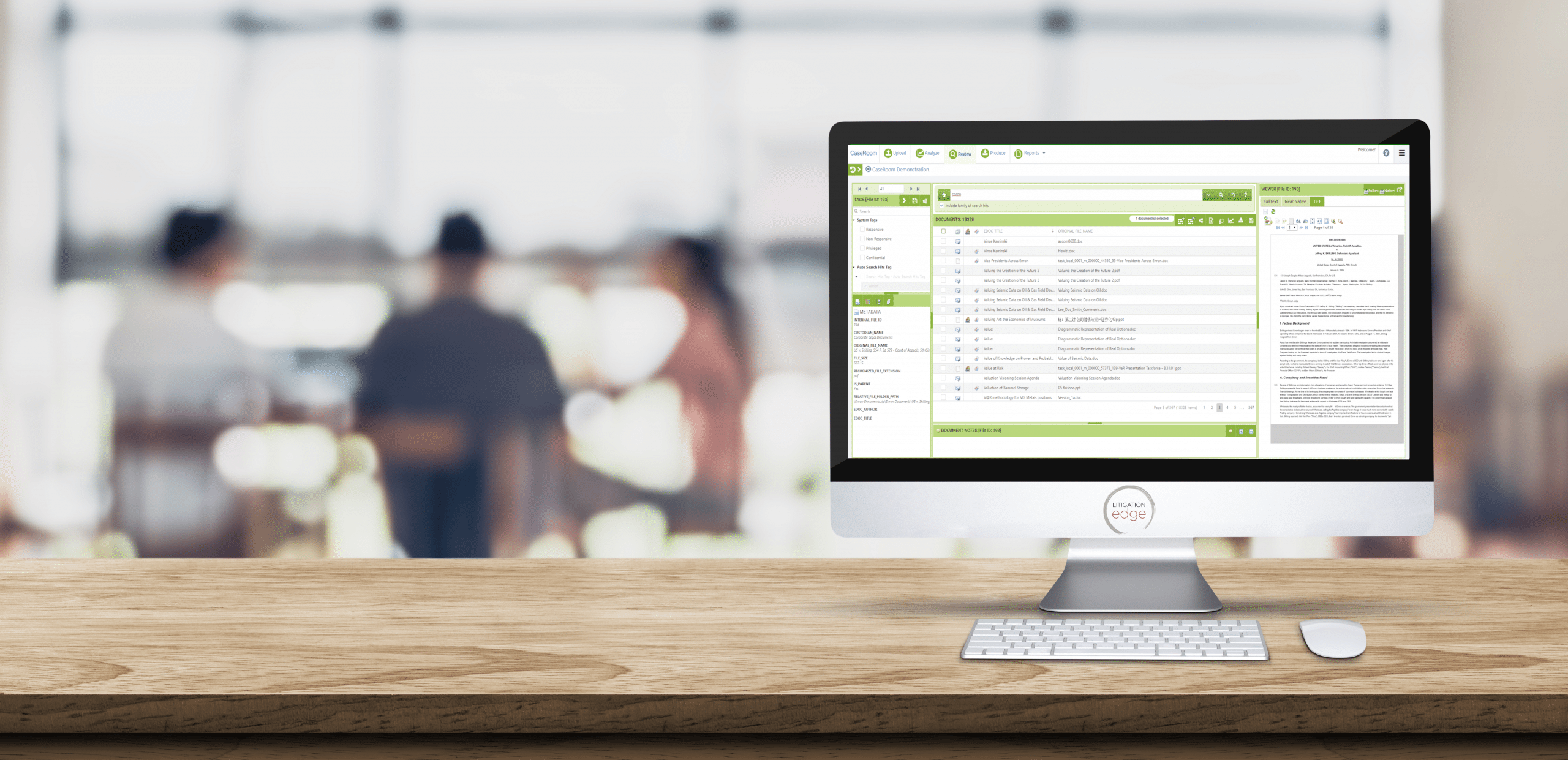

Electronic Discovery

Data Collection, Data Processing, Email Conversion, Document Review, Mobile Phone Review

Data Management

Data Privacy, Analytics, Dynamic Dashboards, Machine Learning, Artificial Intelligence, Natural Language Processing, Semantic Search, Data Visualization

Legal Operations

Deal Rooms, Document Management, Due Diligence, Case Management, Production Management, Practice Management, Document Review, Solution Development

Customer Experience

Solutions Training, Relationship Management, Best Practices, Workflow Design, Enablement/Engagement